How to plan, launch and analyze web user testing

User testing is a qualitative research method that shows how real users interact with a website or product. It helps designers, or anyone who works in UX or CRO, identify the issues and pain points that customers encounter, leading to a better overall user experience.

In this article, we will go over how to plan, launch, and analyze web user testing, including the tools that are good for user testing, why it can be expensive, and how much data/testers you need.

Tools

One of the first things to consider when planning web user testing is the platform that you will use. There are a variety of tools available, each with its own strengths and weaknesses. Most of these tools work by the same logic: they maintain a pool of testers you can recruit from, or you can set up the test and recruit your testers from somewhere else. Recruiting your own testers is usually a much cheaper option but requires more effort.

Which option to go with is highly dependent on whether or not you have a large enough email list or enough traffic on your website to be able to recruit your own testers in exchange for some sort of incentive. Even if you do, there are some downsides to this approach:

People who are already familiar with your brand and website will already understand the experience and won’t have as much trouble getting through the tasks compared to new users. As a result, you might not get as many insights as you would with an external panel with fresh eyes.

You’ll have to come up with a reward or incentive that is valuable enough to motivate them to spend a half hour or more going through your task list, without breaking the bank. This might take some trial and error until you find a formula that works.

It’s pretty tough to motivate people to do something they don’t understand, so you’ll have to explain the basic concept of user testing since most of your users won’t already be familiar with it.

The attention of the people on your email list and visitors to your website is a precious resource, and you might want to preserve it for other purposes that are more relevant to your existing users like customer surveys, on-site polls, or promotions and campaigns.

Because of these challenges, most companies tend to pay for full-service platforms and make use of their pool of testers. The logistics and rewards for tests have been fleshed out and you’ll be able to get your results back very quickly most of the time if your targeting isn’t too nitpicky. Another pro for the full-service platforms is that most of them are willing to compensate for bad tests. This means that if a tester's audio quality is really bad or they skip most of your tasks, most respectable platforms will give you a free test in return to run it again.

Within the tool, you usually have the option to launch a moderated or an unmoderated test. For the purposes of this article, we’ll be focusing on unmoderated testing. It is already a lot of work on its own, and having a moderator present for each session adds to that significantly and means you have to schedule the sessions.

A good testing platform will also offer the capability of doing tests on different devices like desktop vs mobile. This is important because the user experience between mobile and desktop devices is fundamentally different, so you’ll want to research those separately to draw any reasonable conclusions.

Some tools to check out for user testing:

Why is user testing so expensive?

One question that often comes up when considering user testing is why the cost can be so high. There are a few reasons for this:

Recruiting testers can be time-consuming and requires a certain level of effort to find the right people. These companies have probably put years into building huge pools of testers that you can easily recruit from.

Paying testers for their time and providing incentives definitely adds to the cost. A lot of these tools have also gotten very sophisticated, offering lots of different features for analysis. All of these features can be really costly to build.

The current climate in the customer research SaaS industry is also very geared toward enterprise-level clients. A lot of these companies don’t focus on marketing to small agencies and freelancers anymore because they know large enterprises are very UX driven these days and can afford to throw a lot of money at tools.

The pricing models of the tools vary so much that it’s really difficult to estimate a ballpark figure of how much a user testing project will cost you. Some tools allow you to “pay as you go” starting from around 30 dollars per test, but then there are usually time limitations on how long the test can be, etc. Some platforms require demos before they tell you anything about pricing, some require a monthly or yearly subscription fee on top of charging you separately for each test, and sometimes you need to purchase large buckets of credits at a time.

I would even go so far as to say that one of the more annoying and complicated parts of launching a good user testing program is to figure out which tool to use just because the pricing models have gotten so complicated and a lot of them are designed to lock you in for a longer period of time. A little bit of research and comparison to figure out the best tool for you is still worth it for the insights you will get from your user testing project.

How many testers do I need?

The answer to this question will depend on your specific goals and the complexity of your website or product. In general, it is recommended to test with at least 5-10 testers to get a good understanding of any issues or pain points. Read through this great article by Jakob Nielsen, “Why You Only Need to Test with 5 Users”, before you run off and launch a very expensive 100-user test that will take ages to analyze just because someone told you that you need statistical significance.

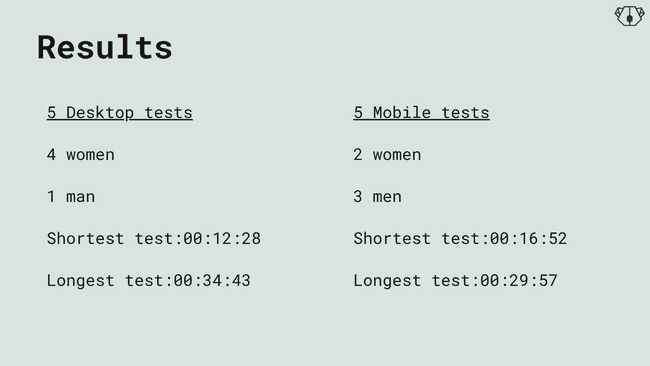

The other aspect to consider with user testing, as just mentioned, is the analysis. An average good quality test that takes the user through the entire web funnel will take around 20-40 minutes. If you launch 5 mobile tests and 5 desktop tests and they average a half hour each, then this will add up to 5 hours of watch time at least.

To be fair, most user testing platforms offer the option to watch the videos at different speeds so you can play them all on 1.5 or 2x speed to make life easier, but the reality of this kind of analysis is that you need to pause, take notes, take a break, really deeply think about what you saw and what it means, and then usually compile a report that somehow brings this project together and conveys the findings to the people that didn’t watch these videos but still need the insights from it.
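To get a feel for the numbers above, here is a minimal sketch of the watch-time arithmetic. The function name and parameters are made up for illustration, and it only counts raw video time, not the pausing, note-taking, and reporting that come on top of it.

```python
def watch_time_minutes(tests_per_device, devices, avg_minutes, playback_speed=1.0):
    """Total minutes of video to watch at a given playback speed."""
    total_tests = tests_per_device * devices
    return total_tests * avg_minutes / playback_speed


# 5 mobile + 5 desktop tests, averaging 30 minutes each
normal = watch_time_minutes(5, 2, 30)        # 300 minutes, i.e. 5 hours
faster = watch_time_minutes(5, 2, 30, 1.5)   # 200 minutes
fastest = watch_time_minutes(5, 2, 30, 2.0)  # 150 minutes

print(normal / 60, faster / 60, fastest / 60)
```

Even at 2x speed that is still two and a half hours of video before you have written a single note.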

Ok, so how do I actually plan out the tasks for the testers?

Before you start putting together the task list, spend a bit of time figuring out the overall purpose of your user testing project. Are you trying to understand the entire shopping flow, or do you need to focus on a specific area of the site that has proven to be problematic? Are you trying to inform an experimentation program by gathering ideas for new test hypotheses? Perhaps it's something more unique to your business case entirely?

When planning the actual tasks for user testing, it is important to keep in mind the specific goals of the test and the overall user journey. Some specific tasks to consider might include navigating to a specific page, completing a form, or purchasing a product. It is also important to include both specific and general tasks to get a well-rounded understanding of the user experience.

Some sources will tell you to create arbitrary success metrics to decide whether or not a tester “passed or failed” the test. This to me seems extremely counterintuitive and defeats the whole purpose of a qualitative research project. Your purpose should be to find out what you don't know. There should be no wrong answers for the testers. As mentioned you should absolutely include more specific tasks for the testers if there is an area of the site you're specifically interested in, but the overall sentiment should be as open-minded as possible. It’s way more interesting to see how and why a user failed a task than to count the number of users who failed.

Most platforms give you the option to require testers to have their cameras either on or off. Unless you’re super into reading facial expressions, there’s not much value in having the camera on for an unmoderated test. Make sure you emphasize in the intro though that you want them to narrate absolutely everything they’re doing, to “think out loud”, and that there are no wrong answers.

Here’s a list of potential tasks you might want to test on a typical e-commerce site:

Look around the home page. Please explain out loud what this company does and what this site is about

(Note: Impression tests are highly debatable in this industry. Some people love them, some hate them. We find that most of the time it’s still a good simple task to ease the testers into the testing flow and get some information about whether or not your home page value proposition is clear enough.)

Browse the different product categories and find a product you genuinely like. Explain your thought process behind finding the perfect product for you. Add that product to your cart.

(Note: This is an example of a general task where you can let the tester explore on their own and see what comes up. You might think you have a good understanding of how new users discover products on your site and where they navigate to from your home/landing page, but this type of task is good at highlighting what you don’t know.)

You've decided you want to buy this specific product (insert a link to a PDP you want to test here). Go through all the product options - color, size, etc. and add the version you want to your cart.

(Note: This is an example of a more specific task. You might want to choose your highest converting or just highest traffic PDP to get some extra information about how people interact with it. Some companies we work with also have differences in layout for their product pages so you can add a couple of these types of tasks, directing testers to all of these pages you need extra information about.)

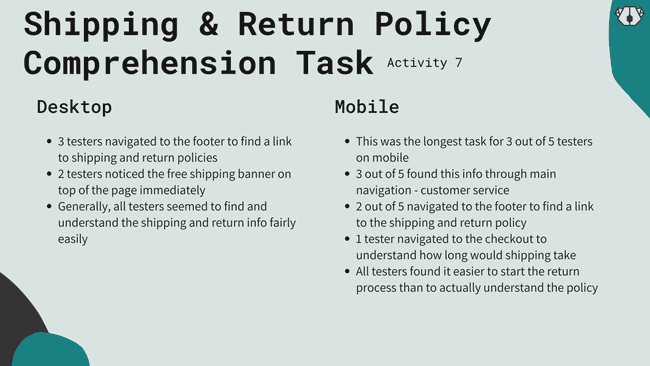

Try to find information about the company's shipping and return policies. How do you feel about ordering from this website and possibly returning the product based on the shipping and return policy? How much time will shipping take?

(Note: Shipping and returns are by far the biggest hurdle for most e-coms. Even when you think the policy is obvious enough, there will always be hesitations around this, especially if you ship internationally. We often find that many customers navigate to checkout immediately to find this info rather than going on a wild goose chase through your navigation. This is a huge friction point and should be tested consistently to make this information as clear as possible. Lots of conversions are lost every day to unclear and ambiguous shipping policies.)

You are now ready to complete your order and check out. First, you want to make sure everything makes sense in your cart. Navigate to the cart and add a second product.

(Note: Whether or not you need this task depends on the flow of your site. If your cart is stupidly simple or you mostly direct customers to checkout without opening the cart anyway, you might not need to include a cart-specific task.)

Start the checkout process and fill in the shipping information with fake made-up information. Your task will end when you get to the payment step.

(Note: First things first: you never want to give the impression that the tester is supposed to fill out your checkout with their actual credit card info and accidentally place an order. Make that painfully clear. This task will also again heavily depend on what your checkout process looks like. If you have a standard Shopify checkout then there are usually no major surprises there. The main thing that might cause confusion is again the information about shipping. If you have a custom-built checkout flow, you might see more issues come up within this task.)

What was the most frustrating part about shopping on this website? If you had a magic wand, what would you change about this website to make it better?

(Note: It’s good to close with a general task that would make the tester summarize their experience, and let you know what were the tough parts and what they would change.)

The shorter your funnel, the harder it will be to do proper user testing. If you work with a SaaS website instead or any type of business where the signup flow is incredibly simple, you might benefit more from exit polls/on-site surveys that are triggered to launch right before the customer is about to abandon the site.

What about targeting?

This is kind of a tough one to balance. On the one hand, you want someone who could at least be a potential customer for your brand, but having a tester who is already familiar with your brand and site is not really helpful either. Often we create the first task as a short recognition task. We ask the testers to look around for a couple of seconds and describe what the company does, sells, and stands for. This is also called an impression test and the purpose is to see if new customers are able to grasp what you’re offering or if your value prop, landing, or home page needs a lot more clarity.

Most testing platforms will let you choose from a variety of targeting options such as location, age, level of income, education, and gender. This can help ensure that you are testing with a diverse group of users who represent your target audience. It truly does depend on your demographic.

If your products are exclusively targeted at women and you know that you have no male customers, then there's no point in testing with male testers. If you sell luxury products then it would be wise to select an appropriate income level within the targeting. If your core demographic is seniors, then getting a bunch of teenagers to user test your site isn’t going to be very helpful.

Most platforms also offer the option to add something called a qualifying question. This means that if you can't find the right options within their targeting rules, you can set up a screening question yourself, and testers who give the wrong answer are disqualified. Let's say your company sells dog food, for example, and there is no targeting rule for pet owners. You can launch the test with the first question “Do you have a dog?”.

Outside of cases like these, we recommend not trying to target your exact ideal customer profile. User testing isn’t meant to gather perceptions or opinions. It’s more about observing how people interact with your site. There are better ways to deepen your understanding of your customer’s mindset, like customer surveys, interviews, or even on-site polls, which are done with your actual customers and users rather than people recruited from a testing platform.

User tests are done, how do I analyze them?

There are two mental layers you need to apply while doing user test analysis. The first layer is noting everything that the tester says. The second is noting everything they actually do. Spoiler alert: the two don’t always add up. While user feedback can be valuable, it’s important to also observe actual behavior to get a complete understanding of the user experience.

Most humans are incredibly biased, and that includes your user testers. Testers may want to be liked or may feel pressure to perform well because they are getting paid for their time. We see this all of the time. They can barely get through or understand the task, and they’re struggling a lot, but the thoughts they choose to articulate in the moment are the opposite and paint your site in a non-problematic positive way.

To mitigate this, you might find it helpful (or just interesting?) to go through the FAQ for testers and understand the payment terms and expectations. You might also end up with a couple of “serial testers” as we call them. These are the people who try to do this type of testing for a living and spend their days on these testing platforms. They’ll try to get through the test as fast as possible, leaving you with little to no actual insight about your website experience. In this case, you should absolutely reach out to the platform support again and get your test replaced.

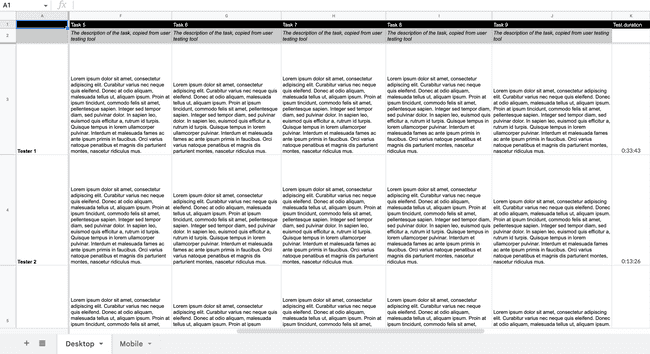

Some platforms have the feature of adding notes and annotations within the app, but you can also just take notes on whatever notes app you are comfortable with. I personally choose to use a Google Sheet and the unclean notes look something like this:

A separate sheet for each device, testers in the first column, and each task in the first row.

When taking notes during the user testing analysis, it is important to write down everything you see without censoring yourself. This will help you capture any issues or pain points that users may encounter. This type of template will give you the opportunity to look at issues for each tester per task and later compare the common patterns within each task. For example, if 4 out of 5 testers didn't get through checkout, you can go to your notes in the checkout task column, and it will be immediately clear.
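If you prefer to keep the same grid in code rather than a spreadsheet, a rough sketch could look like the following. The tester names, task names, and notes are all invented for illustration, and an empty note is assumed to mean that nothing went wrong for that tester on that task.

```python
# Hypothetical notes for one device "sheet": one row per tester, one column per task.
# An empty string means no issue was noted. All of this data is made up.
mobile_sheet = {
    "tester_1": {"find product": "", "checkout": "gave up at the shipping step"},
    "tester_2": {"find product": "missed the category menu", "checkout": "could not edit address"},
    "tester_3": {"find product": "", "checkout": "stuck on the postcode field"},
    "tester_4": {"find product": "", "checkout": ""},
    "tester_5": {"find product": "", "checkout": "unsure about delivery time"},
}


def issue_counts(sheet):
    """Return {task: (testers_with_a_note, total_testers)} for one device sheet."""
    counts = {}
    for tester_notes in sheet.values():
        for task, note in tester_notes.items():
            with_note, total = counts.get(task, (0, 0))
            # bool(...) is True (1) when a non-empty note was written down
            counts[task] = (with_note + bool(note.strip()), total + 1)
    return counts


for task, (with_note, total) in issue_counts(mobile_sheet).items():
    print(f"{task}: {with_note} out of {total} testers had an issue")
```

Keeping one such structure per device mirrors the "separate sheet for each device" layout, so the same function can be run on the desktop notes for comparison. The counts are only a way to spot patterns, not a significance test.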

When analyzing the results, it is helpful to look for common patterns and issues as well as singular issues. Even if a specific issue only arises with one tester, it is still important to take it seriously as it may be indicative of a larger issue. It is also important to give testers enough time to complete the tasks, typically 30-45 minutes. This will allow them to take their time and not feel rushed through the process. To the analysis sheet, you can add the duration of each test for more clarity. I also like to note which was the longest task for each tester individually.
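As a small illustration of the duration notes, here is a sketch that totals each test and picks out the longest task per tester. The per-task minutes are hypothetical numbers you would jot down while watching the videos, not something a platform is assumed to export.

```python
# Hypothetical per-task durations in minutes, noted by hand while watching each video.
durations = {
    "tester_1": {"home page": 3, "find product": 9, "checkout": 12},
    "tester_2": {"home page": 2, "find product": 14, "checkout": 8},
}


def summarize(task_minutes):
    """Return (total_minutes, longest_task) for one tester."""
    total = sum(task_minutes.values())
    longest = max(task_minutes, key=task_minutes.get)
    return total, longest


summary = {tester: summarize(tasks) for tester, tasks in durations.items()}

for tester, (total, longest) in summary.items():
    print(f"{tester}: {total} min in total, longest task was '{longest}'")
```

A task that is the longest one for most testers is usually a good place to start looking for friction.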

On their own, though, your unfiltered notes are usually not enough for a presentation or report that clearly communicates the overall issues that came out of this research. As a last step of the analysis, you can put together a report that highlights the major issues and breaks down everything by task. A couple of examples from the full Koalatative User Testing Report:

It’s good to have a breakdown of the gathered results and remind everyone why you didn't launch a hundred tests as we mentioned in the beginning.

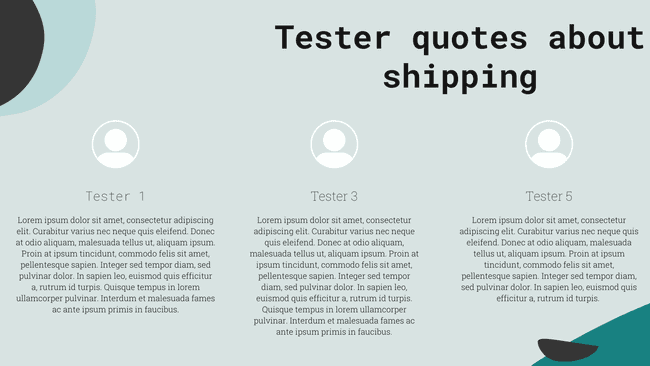

Every once in a while testers will spit out pure gold as direct quotes and answers to the questions that made you start this project in the first place. Most user testing platforms now offer transcription of the video as well so it’s fairly easy to extract those quotes and add the best ones to your report to really drive the point home. Here's an example of how we communicate the best quotes in our report:

Each task or activity that your testers go through should have its own section in your report where you break down the issues by device. Keep in mind that we’re not trying to find significance here by saying 3 out of 5 doing something is worse than 2 out of 5 and so on. This is just a useful simple way to break down the issues and communicate them to the people in your team who didn’t watch 5 hours of tester videos. Here's an example:

For added value, you can also record short clips of testers struggling with something specific that you want to mention and add those to your report as gifs.

Conclusion

User testing can be a valuable tool for uncovering blunders in the user experience of a website or product. By using the right tools, crafting the right set of tasks, and carefully analyzing the results, you can identify and address any issues or pain points that users may encounter. It’s definitely not the easiest form of qualitative research - this is also one of the reasons why a lot of companies don’t invest in this method - but by taking the time to properly plan, launch, analyze, and present the results you gathered through user testing, you can ensure that your website or product is the best it can be for your customers.
