What you think CRO is vs what it actually is

Updated on January 22, 2024

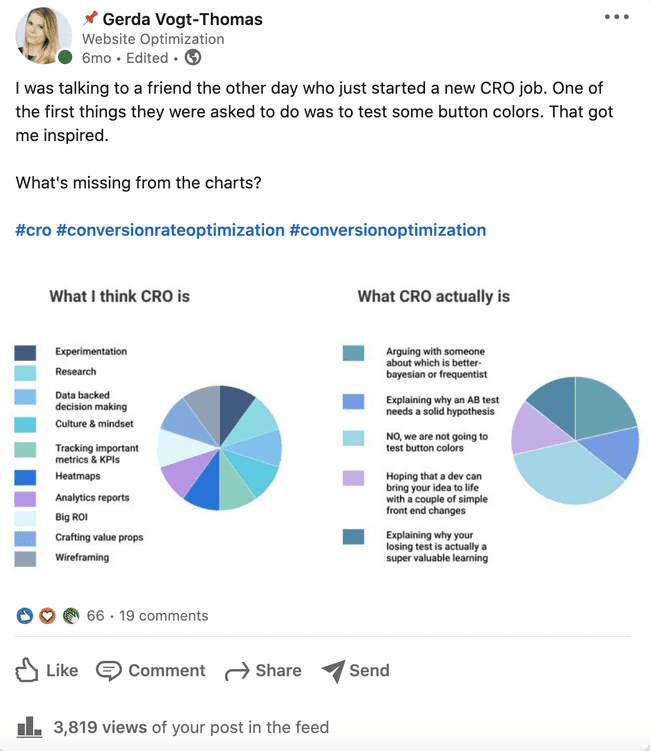

A couple of years ago, I wrote this LinkedIn post:

After hearing this story from a friend who was asked to test button colors at her new CRO job, it almost seemed comical since this has become a meme of sorts in the CRO community. And yet clients, as well as practitioners, keep wanting to try this out for some reason.

That whole discussion made me think about the realities of a CRO job, and since so many people seemed to agree with the pie chart, I decided to break these issues down even further based on my experience. So let's get into it.

Arguing with someone about which is better: Bayesian or frequentist

This is a debate that will most likely outlive all CRO practitioners. Most people I’ve met in the industry have trouble explaining the difference off the top of their heads. There are people who feel very strongly about either one or the other, and there are people who don’t think it really matters at all and say they’re just different methods for getting to the same result. But somehow this topic always seems to arise anyway when testing is involved and it’s hard to agree on the interpretation.

So how the heck should you believe anyone's test results then? Well, I guess the short answer is - it depends. AB test statistics are the same statistics used in medical trials, but if you’re not testing on human lives, you probably have more room for flexibility, both in the significance threshold (you may not need 99%) and in the methods you use. The most important thing is to get on the same page about data interpretation with your team, client, dog or whoever you specifically need to work with.
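To make the "same data, different reading" point concrete, here is a minimal sketch that runs both approaches on one made-up result. The visitor and conversion counts, the function names and the flat Beta(1, 1) prior are all assumptions for illustration, not what any particular testing tool does under the hood.

```python
import math
import random


def z_test_p_value(conv_a, n_a, conv_b, n_b):
    """Frequentist view: two-sided p-value from a pooled two-proportion z-test."""
    rate_a, rate_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / std_err
    # P(|Z| > |z|) for a standard normal
    return math.erfc(abs(z) / math.sqrt(2))


def prob_b_beats_a(conv_a, n_a, conv_b, n_b, draws=20000, seed=0):
    """Bayesian view: chance that B's true rate exceeds A's.

    Assumes a flat Beta(1, 1) prior on each rate and estimates the
    probability by sampling from the two Beta posteriors.
    """
    rng = random.Random(seed)
    wins = 0
    for _ in range(draws):
        a = rng.betavariate(1 + conv_a, 1 + n_a - conv_a)
        b = rng.betavariate(1 + conv_b, 1 + n_b - conv_b)
        wins += b > a
    return wins / draws


# Hypothetical test: 5,000 visitors per arm, 200 vs 240 conversions.
p_value = z_test_p_value(200, 5000, 240, 5000)
p_better = prob_b_beats_a(200, 5000, 240, 5000)
print(f"p-value: {p_value:.3f}")
print(f"P(B beats A): {p_better:.3f}")
```

With these numbers the p-value lands just above 0.05 while the probability that B beats A comes out at roughly 97%. One person reads that as "not significant", another as "B is very likely better", which is exactly why agreeing on the interpretation up front matters more than which camp you're in.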

Explaining why an AB test needs a solid hypothesis

This sort of ties in with the button color testing meme. Have you ever been in a meeting where someone says "OK, I want to test this", you ask them why, and they can’t really explain other than that they think it would be better? Yeah, me too.

Think about it this way: with your experiment you’re trying to answer a question, i.e. "if I do X, will Y happen?" The answer will either confirm that yes, that is in fact the case, or no, sorry buddy, better luck next time. The more data points you have to support your hypothesis, the better off you’ll be. That is the whole purpose of conducting the experiment: to find the answer to the question. If you forget to set the question in the beginning, it’s going to be really hard to determine what the answer is after you've wasted 3 weeks of your life checking in on that conversion graph in your testing tool.

No, we are not going to test button colors

I do think that this one just comes down to some weird personal, emotional or maybe even biological reasoning. Humans feel really strongly about colors even when they don’t bring any real value to the situation.

Think about buying a car: people will pay more to get the color they want even though it will in no way influence the car’s performance. Same with websites, although here testing it on buttons will just waste your time. The visitors in your test will most likely prefer another color anyway, and with buttons on a website it really comes down to visual hierarchy and contrast, i.e. how easily people are able to notice the thing you want them to notice.

I once worked on a client's website whose main color was orange, and I really hate orange if it’s not on an orange. It didn’t matter, because their tests won anyway and their client base really associated the color with the brand.

Hoping that a dev can bring your idea to life with a couple of front-end changes

Now this one takes a lot of time and communication with developers to get right. Estimating ease of implementation for tests (or any new website feature) is a separate art form.

Two common scenarios come to mind with this:

Online forms - an interesting test around forms, depending on your signup flow, is breaking up a field-heavy step into two different digestible steps.

You can ask for low-effort information first so you don't overwhelm your visitor. UX-wise it’s fairly easy: you just split up a step in a reasonable way and design a new screen. The problem comes with validating the information, which happens in the back end.

For example, you need to make sure that the email used is a real one. All of this is of course doable, but if you work as a consultant with external devs who have no business in the client's back end, then getting this set up on the client’s side can be painful.

Tests around pricing - similar story in ecom or any website that sells something and wants to try out different pricing strategies. All pricing info lives on the server side so don’t expect your devs to tweak a couple of dollars in the testing tool and be good to go.

Explaining why your losing test is actually a super valuable learning

I do believe that there is a lot of value in losing tests. If it’s a clear loser, then you can confidently say the change shouldn’t be rolled out, rather than keep guessing and losing a lot of money or leads in the long run.

Additionally (if you have the sample for it), don’t forget to look into segments. Just because the test was a loser across all visitors doesn’t mean that some specific segment couldn’t find value in it. New vs returning visitors behave differently, 20-year-old vs 60-year-old visitors behave differently, visitors from ads vs visitors from organic search behave differently, and so on.
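As a rough sketch of what "looking into segments" can mean in practice, the snippet below computes the relative lift of B over A per segment and refuses to report on segments that are too small. The rows, the segment names, the `lift_by_segment` helper and the 1,000-visitor cutoff are all made up for illustration; pick a threshold that fits your own traffic.

```python
from collections import defaultdict


def lift_by_segment(rows, min_visitors=1000):
    """Relative lift of B over A for each segment.

    rows: iterable of (segment, variant, visitors, conversions),
    where variant is "A" or "B". Segments with fewer than
    min_visitors in either arm (or no conversions in A) map to None.
    """
    totals = defaultdict(lambda: {"A": [0, 0], "B": [0, 0]})
    for segment, variant, visitors, conversions in rows:
        totals[segment][variant][0] += visitors
        totals[segment][variant][1] += conversions

    report = {}
    for segment, arms in totals.items():
        (n_a, c_a), (n_b, c_b) = arms["A"], arms["B"]
        if min(n_a, n_b) < min_visitors or c_a == 0:
            report[segment] = None  # not enough sample to read anything into it
            continue
        rate_a, rate_b = c_a / n_a, c_b / n_b
        report[segment] = (rate_b - rate_a) / rate_a
    return report


# Hypothetical export from a testing tool: B loses overall.
rows = [
    ("new", "A", 4000, 160),
    ("new", "B", 4000, 130),
    ("returning", "A", 2000, 100),
    ("returning", "B", 2000, 110),
    ("ads", "A", 150, 6),
    ("ads", "B", 140, 9),
]

for segment, lift in lift_by_segment(rows).items():
    print(segment, "too small" if lift is None else f"{lift:+.1%}")
```

In this invented data the variation loses with new visitors but comes out ahead with returning ones, and the ads segment is skipped because it is too small. A per-segment difference like this is a lead for the next hypothesis, not a winner in itself.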

Final thoughts

There are a lot of frustrating (or funny? depending on how you look at it) misconceptions about CRO and the good people of our industry are doing their best to debunk and educate on these every day.

I also find it really interesting that even though there are clear benefits to CRO, the practitioners still face these very common objections or ideas inside their organizations or from the clients that they work with.

As I mentioned under the button color theme that originally brought me to write about this topic, it all most likely comes down to psychology, as does everything in marketing. Confirmation bias is a beast, and accepting ideas that we don’t personally believe in threatens our identity. It shouldn’t: new information can only make us better optimizers.
